If you’ve been meaning to learn American Sign Language (ASL), or just want to brush up on your vocabulary, Nvidia has announced a free new tool, built with the American Society for Deaf Children and creative agency Hello Monday, that harnesses AI to teach prospective users how to sign.

The announcement post on the Nvidia Blog laments that, although ASL is the “third most prevalent language in the United States”, AI tools for it are far less prominent than those for English and Spanish.

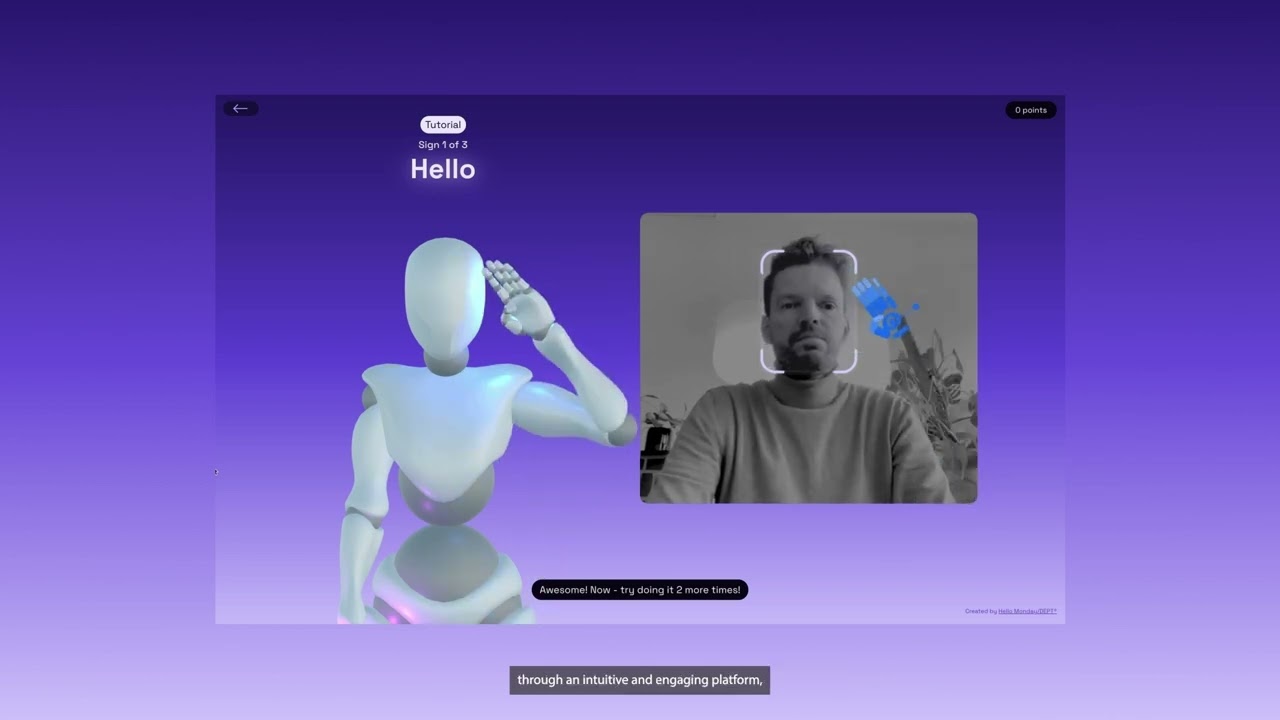

Signs, Nvidia’s new ASL learning platform, uses footage from your webcam to teach you signs and correct your technique. Notably, you can’t currently use Signs without granting it webcam access, because the app gives you direct feedback on how you sign and how to make your hand movements clearer.

Nvidia is building a dataset that it aims to grow to “400,000 video clips representing 1,000 signed words”, with each sign validated by fluent ASL users. Effectively, Nvidia’s AI is used to interpret signs, categorise them, and then pass them on to real-life users who can validate the findings.

Though we don’t yet have any confirmation of support for other sign languages, the press release states:

“NVIDIA teams plan to use this dataset to further develop AI applications that break down communication barriers between the deaf and hearing communities”

ASL involves more than hand gestures; facial expressions also shape meaning, so the next step for the team behind Signs is to work out how to factor those into its corrections and lessons.

As well as this, a future version of the tool is intended to incorporate “regional variations and slang terms”.

The dataset will supposedly be released later this year, but for now, you can learn ASL or contribute to the honing of the app by visiting the Signs website.

The mention of AI may raise red flags, but this use of it seems to go beyond simply compiling (or scraping copyrighted) data, as common usage of the term might suggest. Signs actively attempts to interpret gestures, something that AI is well suited to. The fact that it all goes through a human at the end of the chain, who can verify accuracy, should help keep the data from skewing and the model from hallucinating.

Also, the fact that it’s being used to teach an oft-overlooked language, and not to generate glossy pictures of emojis, makes its use of AI even better. If it can build meaningfully on this start to include more gestures and dialects, Nvidia could have a real winner on its hands.